Detailed technical description (form B)

Summary

The Internet is now recognized and used as a very inexpensive medium for large-scale information dissemination. The various techniques for disseminating information on the Internet can be distinguished by their degree of control over the origin and quality of the information, their dissemination precision (the fraction of users interested in the disseminated information) and their dissemination lag (the time needed for interested users to discover a new piece of information). For example, “spam” messages are uncontrolled, poorly targeted and have no lag. “News” forums improve dissemination precision but often require moderation by a human to ensure the quality of the disseminated information. Web pages make it possible to guarantee the origin (the site) and the quality of the information, but suffer from a significant publication lag due to the refresh time of search engines.

Many web sites apply the principle of “web syndication” to disseminate new information. This principle refers to a set of technologies based on XML formats (RSS, Atom) and the publish/subscribe approach for the controlled and efficient dissemination of information on the web. Information providers announce new information (for example an article in an online newspaper) through RSS or Atom feeds, to which interested clients can subscribe using web portals or specialized software (RSS/Atom readers). In the end, this process allows each user to build a personalized information space that monitors, “in real time” and in a targeted manner, the evolution of professional, commercial, community and personal information published on the Web.
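As an illustration of what a client does when it subscribes to a feed, the following minimal sketch reads the items of an RSS 2.0 document with the Python standard library. The sample feed, its titles and its URLs are hypothetical, and the function name `read_items` is an assumption made for this example; it is not part of the ROSES project.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hypothetical RSS 2.0 document; the channel, titles and URLs are made up.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example journal</title>
    <link>http://example.org/</link>
    <description>Illustrative feed</description>
    <item>
      <title>First article</title>
      <link>http://example.org/articles/1</link>
      <guid>http://example.org/articles/1</guid>
      <pubDate>Mon, 03 Apr 2006 09:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Second article</title>
      <link>http://example.org/articles/2</link>
      <guid>http://example.org/articles/2</guid>
      <pubDate>Tue, 04 Apr 2006 10:30:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def read_items(rss_text):
    """Return the items of an RSS 2.0 document as a list of dictionaries."""
    root = ET.fromstring(rss_text)
    items = []
    for node in root.findall("./channel/item"):
        items.append({
            "title": node.findtext("title"),
            "link": node.findtext("link"),
            "guid": node.findtext("guid"),
            # RSS 2.0 dates follow the RFC 822 format used by e-mail headers.
            "published": parsedate_to_datetime(node.findtext("pubDate")),
        })
    return items


for item in read_items(SAMPLE_RSS):
    print(item["published"].isoformat(), item["title"])
```

A real reader would download the document from the feed address at regular intervals instead of using an embedded string.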

The ROSES project (Really Open Simple Efficient Syndication) aims to generalize the principle of web syndication to databases in order to implement and extend the services offered by current syndication portals and software. It plans to explore two research directions in particular:

- Current syndication services are still very limited and essentially allow keyword filtering, concatenation and temporal sorting of feeds. A first objective of the project is to define and implement aggregation, personalization and enrichment services that facilitate the exploitation and generation of RSS feeds. These services will be built on XML data integration techniques and the XQuery language.

- The number of RSS feeds and users grows every day, and specialized aggregation portals such as Blastfeed.com, Plazoo.com and Technorati.com increasingly face scalability problems. For instance, the number of feeds indexed by http://technorati.com/ doubles approximately every six months and reached 36 million feeds in April 2006, which corresponds to 50,000 publications per hour. The ROSES project plans to apply and extend query evaluation and optimization techniques for distributed data in the context of web syndication. In particular, it will study the deployment of syndication services in a distributed P2P infrastructure.

At the industrial level, the objective is to study different applications based on RSS feeds and to define the infrastructures and services suited to these applications.

Introduction

The ROSES project aims at defining a set of web resource syndication services and tools for localizing, integrating, querying and composing RSS feeds distributed on the Web. We distinguish between two kinds of services :

- Feed Management Services :

  - catalogue

  - acquisition, storage and refresh

  - basic filtering (keyword) and notification

- Feed Composition Services :

  - advanced filtering and querying (temporal, structured, multi-channel)

  - feed aggregation and data integration views

  - ranking and top-k queries

Technical and scientific challenges

Whereas RSS documents can be considered as a special kind of XML-RDF document that can be queried with any existing XML (XQuery, XPath) or RDF (SPARQL) query language, the combination of RSS syndication, XML query processing and distributed data and query processing creates new technical and scientific challenges that we intend to tackle in this project :

- RSS data model and algebra : RSS feeds are ordered sequences of XML documents encoding a flow of time-stamped messages called items. Each item generally (but not necessarily) annotates an “external” web resource identified by a URL. From this point of view, aggregating RSS feeds corresponds to querying (virtually infinite) sequences of items temporarily available in specific XML documents identified by a feed address. An important objective of this project will be the definition of a formal RSS data model and algebra with precise semantics in terms of operations on time-stamped XML document sequences.

- RSS feed management : We will study the definition and implementation of basic RSS feed management services (store, refresh, filter, notify) based on the RSS data model and existing technology for storing and querying (XPath/XQuery) XML documents. In particular, we intend to evaluate and extend an existing RSS aggregation system (Blastfeed) built on top of an XML web data warehouse (Xyleme).

- Distribution and optimization : RSS syndication is generally implemented in terms of a traditional two- or three-level client/server architecture where RSS feeds are aggregated directly by the user client or indirectly by an intermediate web portal. Whereas this kind of architecture might be sufficient for many use cases, we believe that RSS syndication “naturally” fits into a completely distributed architecture connecting clients, feed producers and feed aggregators. Distribution brings many well-known advantages (resource sharing and load balancing, high availability through replication, …) to RSS syndication applications, provided it is combined with efficient data replication and query evaluation strategies. One challenge in this project will be to study various optimization problems related to the distributed storage and aggregation of RSS feeds. The proposed techniques will be based on existing data replication, load balancing and query optimization techniques for distributed XML data. In particular, we will study these problems in the context of a P2P architecture, where each peer might play the role of a client, feed producer and feed aggregator.

- Dynamic feed aggregation and generation : Feed aggregation consists in choosing and merging RSS feeds. This process might be guided by specific user interests (profile), local data and ranking scores (credibility, relevance, importance). Based on a well-defined model and query language for simultaneously querying XML data and RSS feeds, it is possible to define new powerful RSS feed aggregation and data management services. In particular, it is possible to define dynamic personalized RSS/XML views filtering and composing existing feeds and external data. For example, personalized feed aggregation might consist in joining incoming RSS feeds with user profiles stored in the form of simple XML documents. The same mechanism can be used to generate new enriched feeds from existing feeds and external data. Feed-specific relevance and popularity scores make it possible to rank feeds and their items and to apply top-k query processing algorithms.
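To make the notions of time-stamped item sequences, feed merging, profile-based filtering and top-k ranking more concrete, here is a minimal sketch in Python. It is only an illustration under simplifying assumptions: the `Item` class, the function names, the sample feeds and the credibility scores are all hypothetical, and the project itself targets a declarative XQuery-based model rather than this kind of ad hoc code.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Item:
    """A time-stamped feed item (hypothetical, simplified model)."""
    feed: str
    guid: str
    title: str
    published: datetime


def merge_feeds(*feeds):
    """Merge feeds, each already sorted by publication time, into one ordered sequence."""
    return list(heapq.merge(*feeds, key=lambda item: item.published))


def filter_by_profile(items, keywords):
    """Keep the items whose title contains at least one profile keyword."""
    wanted = {keyword.lower() for keyword in keywords}
    return [item for item in items if wanted & set(item.title.lower().split())]


def top_k(items, score, k):
    """Return the k items with the highest score."""
    return heapq.nlargest(k, items, key=score)


# Two made-up feeds, each sorted by publication time.
news = [
    Item("news", "n1", "XML databases in practice", datetime(2006, 4, 1, 8, 0)),
    Item("news", "n2", "Election results", datetime(2006, 4, 1, 12, 0)),
]
blog = [
    Item("blog", "b1", "Querying XML feeds", datetime(2006, 4, 1, 9, 30)),
    Item("blog", "b2", "Holiday photos", datetime(2006, 4, 1, 13, 0)),
]

# Assumed feed-level credibility scores used for ranking.
credibility = {"news": 0.9, "blog": 0.4}

merged = merge_feeds(news, blog)
selected = filter_by_profile(merged, ["xml"])
best = top_k(selected, lambda item: credibility[item.feed], 1)

print([item.guid for item in merged])
print([item.guid for item in selected])
print([item.guid for item in best])
```

The merge relies on each input feed being ordered by time stamp, which mirrors the view of a feed as an ordered sequence of items; a formal algebra would have to state such ordering assumptions explicitly.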

Summary of key issues

This project addresses the following technical and scientific key issues combining XML query processing, distribution and information flows :

- XML-RSS data model and algebra

- Declarative (query-based) RSS feed aggregation

- Distributed XML query processing

- Data replication and load balancing

- XML-RSS syndication views

- RSS feed and item ranking models and algorithms

Economical benefits and issues

Like search engines, which already play an important role in the modern information society, web syndication is gaining more and more importance at the economic level. One explanation for the success of web content syndication is the observation that a large amount of the information published on the web consists of time-stamped, uniquely identified chunks of data with metadata (news stories, uploaded photos, events, podcasts, wiki changes, source code changes, bug reports). The possibility to create, observe and aggregate well-defined information channels on the web makes it possible to reduce the distance (cost, time, effort) between information producers and information consumers at web scale :

- Media companies (TV, radio, press) use web syndication for publishing their contents to their clients, who can build their personalized media space by choosing and aggregating topic-specific feeds according to their interests.

- Electronic commerce applications use web syndication for linking products to potential clients who “actively” choose to be informed about the evolution of existing products and the appearance of new products in the catalogue.

- Electronic auction systems like eBay allow clients to be informed about the bidding process concerning objects they are interested in.

- More generally, RSS syndication can be used for observing, filtering and aggregating “external” web information according to some specific economic domain (technology watch, economic watch).

The ROSES project aims at developing a flexible and efficient web syndication model for building this kind of application. Flexibility and efficiency are achieved by a high-level syndication model based on declarative languages and distributed XML data management technology.

Contribution with respect to the ANR call for projects

This proposal addresses several priorities and objectives mentioned in the MDCO program of the ANR call for projects. The main objective is to develop a web information management infrastructure combining distributed XML data management and RSS web resource syndication. The project takes into account several important dimensions of web information :

- Distribution and volume : The increasing number of web sites and web resources

RSS syndication is used for reducing the “publication lag” of web resources. RSS feeds are XML documents distributed all over the web, and the number of RSS feeds is growing every day.

- Flexibility :

The main research topics concerning this project are mentioned in “Axe 2 : Algorithmes pour le traitement massif de données” (Axis 2: algorithms for massive data processing, page 8) :

- XML, blogs, discussion threads

- distributed data (web, P2P)

- distributed query processing and optimization

- data streams (taking evolution into account)

- time scales

Expected results and contributions

The main expected results and contributions are :

- a general web syndication infrastructure for describing different centralized and distributed syndication scenarios

- a formal XML-based RSS feed model and algebra

- a programming API and environment providing basic feed management services

- distributed query evaluation, data replication and load balancing algorithms for RSS feed data

- a high-level view language for dynamic RSS feed generation, enrichment and personalisation

- new efficient filtering and ranking techniques for RSS feed data

Summary

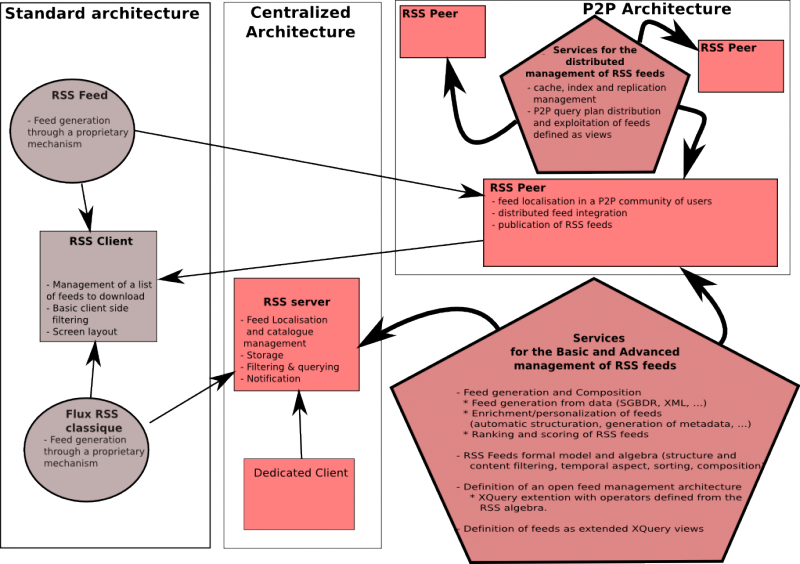

The figure summarizes the technical and scientific contribution of the proposed project and its integration with existing technology. The right part of the figure (gray) shows the simplest form of RSS-based web resource syndication: a feed is an evolving XML document downloaded by a specialized user interface (RSS reader). The rest of the figure shows the architectures we will study in our proposal.
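The simplest scenario, in which a reader repeatedly downloads an evolving feed document, can be sketched as a comparison of successive snapshots of the feed. The code below is a hedged illustration only: the function `new_items`, the dictionary layout of an item and the two snapshots are assumptions made for this example, and items are identified by a `guid` field as in RSS 2.0.

```python
def new_items(seen_guids, snapshot):
    """Return the items of a snapshot that were not seen before,
    together with the updated set of seen identifiers."""
    fresh = [item for item in snapshot if item["guid"] not in seen_guids]
    return fresh, seen_guids | {item["guid"] for item in snapshot}


# Two hypothetical snapshots of the same feed: the oldest item has
# disappeared from the second one and a new item has been published.
snapshot_t1 = [
    {"guid": "a1", "title": "Item 1"},
    {"guid": "a2", "title": "Item 2"},
]
snapshot_t2 = [
    {"guid": "a2", "title": "Item 2"},
    {"guid": "a3", "title": "Item 3"},
]

seen = set()
first, seen = new_items(seen, snapshot_t1)
second, seen = new_items(seen, snapshot_t2)

print([item["guid"] for item in first])
print([item["guid"] for item in second])
```

Because items are only temporarily available in the feed document, the client has to remember which identifiers it has already processed; otherwise an item still present in the next snapshot would be reported again.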

Consortium description

The project consortium is composed of four research groups (Wisdom-LIP6, Wisdom-CNAM, LSIS, PRISM) and one industrial partner (2or3things). All participants have a long complementary experience in distributed data management focused on XML data and peer-to-peer architectures:

| Expertise | WISDOM LIP6 | WISDOM CNAM | LSIS | PRISM | 2or3things |
|---|---|---|---|---|---|
| XQuery implementation | x | x | | | |
| XML data warehousing | x | x | x | | |
| P2P query mediation and view-based data integration | x | x | | | |
| semantic web and RDF/XML metadata management | x | x | | | |
| data replication and distributed transaction management | x | | | | |
| P2P query routing and load balancing | x | | | | |
| data and service ranking algorithms | x | | | | |
| data stream query processing | x | | | | |

Existing collaborations

LSIS, PRISM, LIP6 and CNAM participate in the ACI MD SemWeb project (2004-2007, http://bat710.univ-lyon1.fr/~semweb/). ROSES can be considered as a follow-up project of SemWeb, concentrating on a particular kind of “semantic web” data (RSS feeds).

LIP6 and CNAM are also involved in the Plan Pluri-Formation (PPF) Wisdom (started in 2006) which brings together the research activities of three database research groups (LIP6, CNAM, Univ. Dauphine). The ROSES project consolidates the collaboration between CNAM and LIP6 about web information publishing and dissemination.

LIP6 and PRISM participate in the RNTL WebContent project (2006-2008, http://www.webcontent.fr/), which defines and implements an open platform for building industrial semantic web applications. The ROSES project might offer an additional testbed for validating the WebContent platform in the future.

Participant list

Wisdom

Web site : http://wisdom.lip6.fr

Description : Wisdom is a “Programme Pluri-Formation” (PPF) between the database research groups of the Conservatoire National des Arts et Métiers (CEDRIC Laboratory), the University Paris 9 Dauphine (LAMSADE Laboratory) and the Université Paris 6 Pierre et Marie Curie (LIP6 Laboratory). It formalizes their long-standing collaboration on the topics of web information publishing and content-based image retrieval. Two Wisdom members participate in the project :

- The LIP6 Database Research Group (http://www-bd.lip6.fr) has a long research experience in distributed data management. Its current activities concern issues related to distributed data management in large-scale distributed environments such as the web and P2P networks. The main research domains concern distributed ranking algorithms for data and services, freshness-aware data replication and transaction routing strategies and dynamic load balancing.

- The Vertigo/CNAM research group (http://cedric.cnam.fr/vertigo) is actively involved in research on data and service management on the Web. Its current activity on this topic concerns XML data integration in large-scale repositories (e.g. Xyleme) and P2P architectures, views for easy access to heterogeneous XML data and P2P content management.

Research experience related to the proposal (systems, prototypes, publications, contracts) : Wisdom (LIP6 DB group and Vertigo) has long research experience in XML query mediation, declarative XML views, P2P query routing, freshness-aware query processing, distributed query optimization and link-based ranking algorithms. In addition, Cédric du Mouza, associated researcher in Wisdom, brings his experience in modeling and querying time sequences. These research activities are currently supported by several national research grants (RNTL WebContent, ACI Respire, ACI SemWeb) and have been validated by several prototypes and publications in national and international conferences and journals.

[GNP+07] Stéphane Gançarski, Hubert Naacke, Esther Pacitti, Patrick Valduriez: The leganet system: Freshness-aware transaction routing in a database cluster. Inf. Syst. 32(2): 320-343 (2007)

[LGV05] Cécile Le Pape, Stéphane Gançarski, Patrick Valduriez: Replica Refresh Strategies in a Database Cluster. BDA 2005

[CAG06] C. Constantin, B. Amann, D. Gross-Amblard, A Link-based Ranking Model for Services, CoopIS 2006, Montpellier, France.

[MAA+05] T. Milo, S. Abiteboul, B. Amann, O. Benjelloun, and F. Dang Ngoc. Exchanging intensional XML data. In SIGMOD, 2003. An extended version of this article has been published in ACM Transactions on Database Systems 30(1).

[LDG+05] N. Lumineau, A. Doucet, S. Gançarski. Thematic Schemas Building for Mediation-based P2P Architecture, International Workshop On Database Interoperability (InterDB 2005), Namur, Belgium, April 2005.

[BSS06] F. Boisson, M. Scholl, I. Sebei and D. Vodislav. Scalability of source identification in data integration systems. In ACM/IEEE SITIS Conference, 2006. (ref. CEDRIC 1125)

[BSS06b] F. Boisson, M. Scholl, I. Sebei and D. Vodislav. Query rewriting for open XML data integration systems. In IADIS WWW/Internet, 2006. (ref. CEDRIC 1067)

[VCC06] D. Vodislav, S. Cluet, G. Corona and I. Sebei. Views for simplifying access to heterogeneous XML data. In CoopIS, pp. 72-90, Springer, 2006. (ref. CEDRIC 1066)

[MRS05] C. Du Mouza, P. Rigaux and M. Scholl. Efficient Evaluation of Parameterized Pattern Queries. In ACM Conference on Information and Knowledge Management (CIKM), 2005

Project participation : Wisdom is the project coordinator and the coordinator of workpackages “WP0: Project Management”, “WP1. System architecture”, “WP2. RSS Model and Algebra” and “WP5. Feed generation and composition”.

LSIS

Web site : http://www.lsis.org

Description : LSIS (Information and System Sciences Laboratory) is a research laboratory in automatic control and computer science, with about 180 members (including 80 Ph.D. students). Its research focuses on information and communication science and technology (STIC) and on control theory, centered on the design and analysis of artificial systems. Its research topics are: distributed information and knowledge; computer-aided modeling, design and reconstruction; inference, constraints and applications; control and simulation; engineering, mechanics and systems.

Research experience related to the proposal (systems, prototypes, publications, contracts) : The LSIS members involved in the project (J. Le Maitre, Elisabeth Murisasco and Emmanuel Bruno) have worked on XML data models and query languages since the SGML era in the 1990s. In this context they developed two functional languages dedicated to the manipulation of electronic documents (SgmlQL for SGML documents and DQL for well-formed XML data). They also worked on the design of an XML-based application for indexing and querying images described as MPEG-7 documents (RNTL MUSE project, 2000-2002), and on the modeling and manipulation of time in XML data (French “Action spécifique « Les temps dans le document numérique » - RTP 33”, Documents et Contenu: création, indexation, navigation). More recently, they proposed an extension of the XML data model and of XQuery dedicated to multistructured XML documents, i.e. documents with several structures defined over the same text (ACI SemWeb, 2004-2007). In this last project, they developed an XQuery prototype, which will be used in the current project.

[BCM07] E. Bruno, S. Calabretto, E. Murisasco « Documents textuels multistructurés : un état de l’art », Revue I3 (Information - Interaction - Intelligence), March 2007. To appear.

[BM06] E. Bruno, E. Murisasco « Describing and Querying hierarchical structures defined over the same textual data », in Proceedings of the 2006 ACM Symposium on Document Engineering (DocEng 2006), pp. 147-154, Amsterdam, The Netherlands, October 2006.

[LeM06] J. Le Maitre, “Representing multistructured XML documents by means of delay nodes”, Proceedings of the 2006 ACM Symposium on Document Engineering (DocEng 2006), Amsterdam, The Netherlands, October 2006, pp. 155-164.

[BM06b] E. Bruno, E. Murisasco « MSXD : a model and a schema for concurrent structures defined over the same textual data », in Proceedings of the DEXA Conference, Lecture Notes in Computer Science 4080, pp. 172-181, Krakow, Poland, September 2006.

[LeM05] J. Le Maitre, “Indexing and Querying Content and Structure of XML Documents According to the Vector Space Model”, Proceedings of the IADIS International Conference WWW/Internet 2005, Lisbon, Portugal, October 2005, vol. II, pp. 353-358.

[BML04] E. Bruno, E. Murisasco, J. Le Maitre, « Temporalisation d’un document multimédia », in Document Numérique, vol. 8, n° 4, pp. 125-141, December 2004.

[LMB04] J. Le Maitre, E. Murisasco and E. Bruno, « Recherche d’informations dans les documents XML », in Méthodes avancées pour les systèmes de recherche d’informations, Traité STI, M. Ihadjadene (Ed.), Hermès, pp. 35-54, March 2004

[BLM03] E. Bruno, J. Le Maitre and E. Murisasco, « Extending XQuery with Transformation Operators », Proceedings of the 2003 ACM Symposium on Document Engineering (DocEng 2003), ACM Press, Grenoble, France, November 20-22 2003, pp. 1-8.

[BLM02] E. Bruno, J. Le Maitre, E. Murisasco, Indexation et interrogation de photos de presse décrites en MPEG-7 et stockées dans une base de données XML, Ingénierie des systèmes d’information, vol. 7, n° 5-6, 2002, pp. 169-186.

Project participation: In this project we will be involved in the definition of a formal model for RSS feeds and a dedicated algebra (WP2), the definition of an extension of XQuery for RSS feeds (WP3) and the automatic definition of new RSS feeds as XQuery views by composition of legacy data and existing RSS feeds (WP5). We will also work on the optimization of such queries in a P2P environment (WP4).

Participation in other projects: E. Murisasco and E. Bruno participate in two other proposals under submission (ANR MDCO, ANR SHS). One project under submission (AMETIS) uses the same prototype to study the querying of distributed satellite images extended with metadata described in XML (MPEG-7, RDF, OWL).

J. Le Maitre participates in the AVEIR project (MDCA 2006-2009), where he works more specifically on information retrieval in XML documents.

J. Le Maitre, E. Murisasco and E. Bruno also participate in a second project under submission (ACoMod), in which they will work on an extension of XQuery dedicated to the manipulation of multimodal linguistic data (combined XML modeling of audio, video, speech and gesture).

Prism

Web site : http://www.prism.uvsq.fr

Description :

The PRiSM research laboratory (UMR 8144) is a component of the University of Versailles St-Quentin. The laboratory is specialized in parallelism, networking, DBMS, cryptography and performance modelling. It is organized into eight themes and has more than 50 permanent researchers. The part of the laboratory involved in the current project is the SBD team (Système de Bases de Données), which has long research experience in relational database systems and in XML query mediation.

Research experience related to the proposal (systems, prototypes, publications, contracts) :

The PRISM Database Mediation team has been working since 1997 on mediation with XML as the global model for integration. A research prototype of an XQuery mediator has been developed and marketed under the name XLive. Several companies have industrialized this prototype, among them Odonata (http://www.odonata.fr) and Datadirect (http://www.datadirect.com/products/xquery/index.ssp). The Odonata industrial open source version is available on the site http://xquare.objectweb.org/. The research activity has produced several publications describing the mediation architecture, the query optimization algorithms and the data model. The XLive research prototype is also available in open source and has evolved towards P2P mediation of Web sources. The research team has experimented with several indexing techniques for XML and complex data in a P2P network. An internal representation model (called TGV) for XQuery plan optimization in a centralized architecture has recently been developed in a thesis. These research activities have been carried out within several national research grants (RNTL Muse, ACI SemWeb) and currently within the national research project RNTL WebContent. This research has also been pursued in a European project (Satine). This work constitutes a solid basis for the future development of a mediation architecture based on RSS feeds.

- Florin Dragan, Georges Gardarin, Benjamin Nguyen, Laurent Yeh: On Indexing Multidimensional Values In A P2P Architecture. BDA 2006

- Clément Jamard, Georges Gardarin: Indexation de vues virtuelles dans un médiateur XML pour le traitement de XQuery Text. EGC 2006: 65-76

- Georges Gardarin, Florin Dragan, Laurent Yeh: P2P Semantic Mediation of Web Sources. ICEIS (1) 2006: 7-15

- Clément Jamard, Georges Gardarin: Extending an XML Mediator with Text Query. WEBIST (1) 2006: 38-45

- Georges Gardarin, Laurent Yeh: SIOUX: An Efficient Index for Processing Structural XQueries. DEXA 2005: 564-575

- Florin Dragan, Georges Gardarin, Laurent Yeh: MediaPeer: A Safe, Scalable P2P Architecture for XML Query Processing. DEXA Workshops 2005: 368-373

- Florin Dragan, Georges Gardarin: Benchmarking an XML Mediator. ICEIS (1) 2005: 191-196

- Laurent Yeh, Georges Gardarin: Indexing XML Objects with Ordered Schema Trees. BDA 2004: 21-45

- Tuyet-Tram Dang-Ngoc, Georges Gardarin, Nicolas Travers: Tree Graph Views: On Efficient Evaluation of XQuery in an XML Mediator. BDA 2004: 429-44

- Tuyet-Tram Dang-Ngoc, Huaizhong Kou, Georges Gardarin: Integrating Web Information with XML Concrete Views. Databases and Applications 2004: 268-273

- Georges Gardarin, Tuyet-Tram Dang-Ngoc: Mediating the semantic web. EGC 2004: 1-14

Project participation : Building on our previous experience, we are deeply involved in WP4, studying various strategies for XQuery plan execution in a P2P/RSS infrastructure. We are also involved in WP3, for the API requirements and for the design of a basic XQuery adapter for RSS feeds; in this work package, we will contribute our experience in the integration of heterogeneous sources. Finally, we are involved in WP1 and WP2 for the definition of an RSS feed integration framework to be deployed on the infrastructure defined in WP1.

2or3things

Web site : 2or3things

Description :

Blastfeed is an initiative launched by 2or3things, a French start-up founded in June 2006 and based in Paris.

Blastfeed lets users select the sources of information they are interested in (i.e. RSS feeds), define what they want to look for in them (i.e. sift information) and get results in an amalgamated format, whether delivered by email, RSS, IM, web services, HTTP POST or any other output mechanism. Delivery can be in real time or deferred. Once set up, everything runs on a continuous basis. Blastfeed can be seen as a feed aggregator, a feed filtering tool and a delivery service (push or notification).

As providers of RSS-based services, we have a thorough knowledge of RSS in all its formats. Our technical expertise ranges from Java, LAMP, Ruby on Rails, RSS and XML databases (all of which form the backbone of Blastfeed) and blog platforms to Linux administration and web services (SOAP / REST). From a business standpoint, our goal is to develop further services for the enterprise around the Blastfeed platform.

Project participation :

We mainly participate in WP3, with the development of a full API exposing the current services of Blastfeed, and in WP6, where we will help build the validation and test application. This application will take some features from another application we released recently about the French presidential campaign. The test application will be enhanced with the various developments that are the subject of this project. We will also follow and support the project management and coordination.

That said, 2or3things is actively working on other services based on the Blastfeed platform. At this stage we are not involved in any other project. One of our goals with this project is to benefit from the R&D so as to be in a position to better manage, exploit and use RSS going forward.