Outils pour utilisateurs

Outils du site

Panneau latéral

Table des matières

2 pages au maximum. On précisera, en particulier, la position du projet par rapport à la concurrence nationale et internationale, en donnant les références nécessaires. Pour les projets à vocation appliquée, on décrira également le contexte économique dans lequel se situe le projet en présentant une analyse du marché, de ses tendances,…

This section presents the general context and certain research activites related to the project. The state-of-the art is completed in the presentation of each workpackage.

Bernd : pour gagner de la place j'enlève cette partie (qui est trop générale) : Un réseau P2P désigne un modèle de réseau informatique dont les éléments (les pairs) ne jouent pas exclusivement les rôles de client ou de serveur mais fonctionnent des deux façons tous en assurant chacun le fonctionnement de l'ensemble. Ces architectures se distinguent des grilles de calculs par le fait que le nombre de pairs est très important et que ceux-ci peuvent joindre ou quitter le réseau fréquemment voire brutalement.

Le principe des systèmes pair à pair est ainsi la mise en commun par un

grand nombre de participants indépendants, de leurs ressources (les données, mais aussi leur traitement) afin de

fournir un service commun. Les systèmes pair à pair sont souvent plus tolérants aux pannes, passent plus facilement à l'échelle, et

sont plus adaptatifs que leurs contreparties client/serveur.

Les versions les plus simples dites “non structurées” (Gnutella, KaZaA) posent des problèmes de performance. Une première alternative à été proposée en dédiant certains noeuds à des tâches de gestion (l'arrivée et le départ des pairs, la répartition des tâches, un index centralisé des données sur des super peers), le système devient alors partiellement centralisé (Napster). Les problèmes de l'architecture client/serveur peuvent alors réapparaitre.

D'autres architectures “auto organisées” (Chord, Pastry, CAN) ont été proposées, elles s'appuient principalement sur un index (par exemple une table de hachage distribuée appellée DHT) partagé dynamiquement entre les pairs. Cependant, cette approche supporte mal les déconnexions brutales de pairs. Une solution pratique possible à ce problème a été proposée en répliquant des fragments de cet index sur plusieurs pairs (Plateforme logicielle JXTA proposée par Sun).

Les applications classiques de description et d'échanges de données (avec le langage XML) et d'interrogation de données (avec le langage XQuery) doivent alors être adaptées à ces nouvelles architectures. C'est en particulier le cas des outils de gestion des flux RSS qui s'appuient sur XML.

Economical context : the "Bloggy" Web

Web content syndication refers to publishing so called feeds in order to inform other people or applications about recently added or modified content on a web site. Today many different types of content is syndicated on the Internet and millions of individuals and online publishers including search engines, newspapers, commercial web sites, television and radio stations now publish their blog postings, query results, latest news headlines or product offers in a standard feed format (RSS).

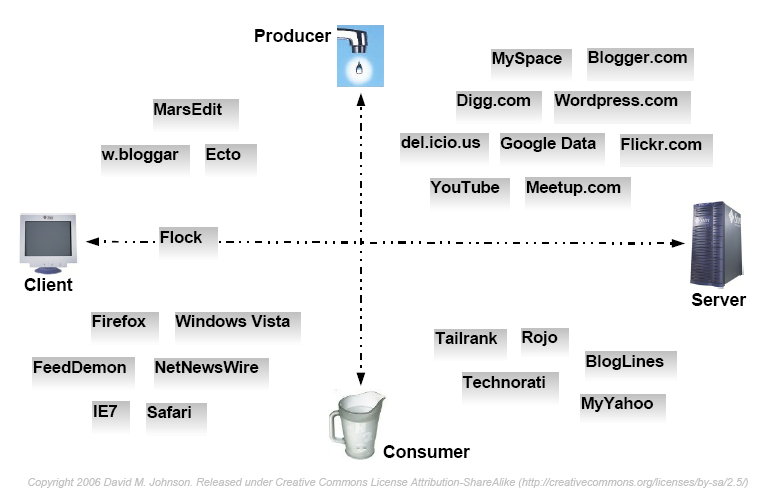

The following figures illustrate the role of web content syndication technology on the Web (version 2.0). Web publishing technologies are separated in two dimensions (producer/consumer, client/server). The upper part of the figure shows tools for producing RSS feeds using locally installed client software (left part) or web portals (right par). The lower part does the same separation for tools and environments for subscribing to and observing feeds.

Copyright 2006 David M. Johnson. Released under Creative Commons License Attribution-ShareAlike (http://creativecommons.org/licenses/by-sa/2.5/)

Copyright 2006 David M. Johnson. Released under Creative Commons License Attribution-ShareAlike (http://creativecommons.org/licenses/by-sa/2.5/)

As can be seen in this figure, web syndication features have been integrated into web browsers (firefox, IE), mail readers (thunderbird), web portals (Myyahoo, YouTube), search engines (Google) and even operating systems (Windows Vista). The proposed project aims at studying the different functionalities proposed by these systems from a database point of view and to propose new infrastructures based on existing XML data management technology.

Technical Context : RSS

The two main web feed formats are RSS and Atom. Both of them use XML as publishing syntax. The initials “RSS” are variously used to refer to “Really Simple Syndication” (RSS 2.0 [RSS]), Rich Site Summary (RSS 0.91, RSS 1.0) and RDF Site Summary (RSS 0.9 and 1.0). RSS is based on the RDF model using an abbreviated XML syntax. Current RSS applications do not exploit the full power of RDF for specifying semantic web graphs and most RSS documents can be considered as simple XML document trees that can be queried by standard XML query languages (XQuery/XPath). In reaction to recognized issues with RSS (and because RSS 2.0 is frozen), a new syndication specification, Atom, was later adopted by the Internet Engineering Task Force (IETF) leading to the publication of a specification (RFC 4287) for the Atom Format in 2005. Supporters of Atom claim that it improves on RSS by relying on standard XML features, by specifying a payload container that can handle many different kinds of content unambiguously, and by having a specification maintained by a recognized standards organization. Critics claim that Atom unnecessarily introduces a third branch of syndication specifications, further confusing the marketplace. RSS seems currently to be more popular than ATOM.

The choice of an XML syntax facilitates the generation and usage of RSS feeds. In particular, it is very easy to generate RSS feeds dynamically from some database using PHP/SQL or XML/SQL publication tools. Generally, feeds are subscribed to directly by users with aggregators or feed readers, which combine the contents of multiple web feeds for display on a single screen or series of screens. Some modern web browsers (Mozilla Firefox et Thunderbird, Opera, Microsoft IE) and operating systems (Windows Vista) incorporate aggregator features and encourage their users to use RSS syndication within other applications. Depending on the aggregator, users typically subscribe to a feed by manually entering the URL of a feed or clicking a link in a web browser, but there also appear feed specific search engines like Plazoo, FeedsForMe and Blogdigger.

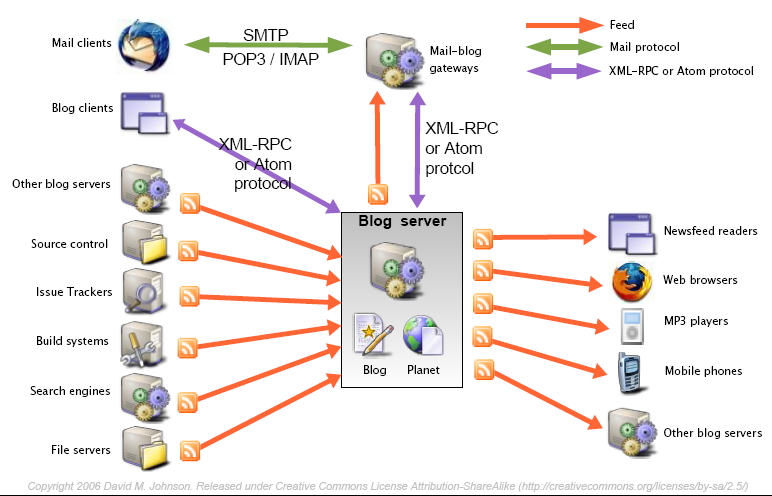

Figure Fig. 2 illustrates how web syndication enables the aggregation and publishing of web information based on a Blog or feed server. The basic approach is to consider RSS as a standard wrapper interface for integrating web content sources.

Copyright 2006 David M. Johnson. Released under Creative Commons License Attribution-ShareAlike (http://creativecommons.org/licenses/by-sa/2.5/)

Copyright 2006 David M. Johnson. Released under Creative Commons License Attribution-ShareAlike (http://creativecommons.org/licenses/by-sa/2.5/)

From a database point of view, Blog or feed servers implement sophisticated web data management functionalities for acquiring, refreshing, storing, indexing, querying and exporting feed data. This project tries to analyse these functionalities and to propose new solutions for efficiently implementing these functionalities. In particular we are interested in the possibility of composing feed servers in a distributed environment.

[RSS] RSS 2.0 Specification, http://www.rssboard.org/rss-specification

[Atom] The Atom Syndication Format, IETF RFC 4287, http://tools.ietf.org/html/rfc4287

[Plazoo] http://www.plazoo.com/

[Feedsforme] http://www.feedsforme.com/

[Blogdigger] http://www.blogdigger.com/

=== Data stream query processing ===

RSS feeds can logically be considered as a certain kind of XML data streams. Data stream systems (Tapestry, Alert, XFilter, Xyleme, NiagaraCQ, STREAM) address the issue of querying multiple, continuous, rapid, time-varying streams of data using fixed memory. These systems generally distinguish between one-time (transient) queries evaluated once over a point-in-time snapshot of a data set and continuous queries evaluated continuously as data streams continue to arrive. Continuous query results may be stored and updated as new data arrives, or may produce data streams themselves. RSS filtering can be considered as a special case of continuous queries observing a stream of XML documents. Our main goal is to apply existing stream query evaluation techniques (sliding windows) emphasizing recent data, which is more important than old data. More practically speaking, we consider that more complex (ad-hoc) queries are evaluated on data stored in some repository. An important issue in this will be to efficiently manage data acquisition and storage for providing fresh and complete results (workpackage 3).

[KS03] N. Koudas, D. Srivastava, Data Stream Query Processing, VLDB tutorial, 2003

[GO03] L. Golab, T. Özsu, Issues in data stream management, SIGMOD record 2003

[BB02] B. Babcock, S. Babu, M. Datar, R. Motwani, J. Widom, et.al. Models and Issues in Data Stream Systems, PODS 2002

=== XML indexing techniques ===

Indexing XML documents requires two types of indexes that can be merged: structure index (i.e, tags) and value index (i.e, keywords). Structure indexes take into account the structural dimension of data in a more sophisticated way than simple routing techniques based on file names (the majority of these works are based on DHTs (Ratnasamy, 2001), (Stoica, 2001)). To index paths and values in P2P networks, two approaches can be distinguished. The first approach applies to data of vector type. Paths and values are mapped to vectors. Distributed indexes based on vectors are useful to localize objects according to several properties (for example, localize all machines having a CPU>500Mhz and a memory MEM > 1Go). Most works in these vein are based on mapping functions (as z-order) which reduce multidimensional data into mono-dimensional data. The result can be indexed in a P2P network using traditional DHT techniques.

The second approach aims at indexing trees. Trees generally summarize XML documents based on dataguides, generalized DTDs, or schemas. The leaves of the tree may include values or intervals. Extended B-trees or Patricia trees may be used to develop the path indexes on super-peers. In the XML documents framework, ontology often provides additional data used for mapping XML documents to some common model. An ontology enables to solve the problem of routing in the network for finding documents with heterogeneous structures (Bernstein, 2002) (Halevy, 2003) (Nejdl, 2003). In all cases, indexing graphs in P2Pnetworks requires a decomposition process in atomic elements which vary from nodes, edges (KadoP) until a set of paths (XPeer). These elements can be indexed using DHTs or order preserving indexes as developed in (Papadimos, 2003), (Sartiani, 2003), or (Guilder 2005).

P2P query evaluation and mediation systems

Query mediation on a P2P network has been recently explored. Piazza (Halevy, 2003) proposes a P2P infrastructure for sharing and mediating XML and RDF sources. XML peers export XML schemas describing local sources while RDF sources export OWL ontologies. When a peer joins the network, it has to provide mappings between his local schema or ontology and some foreign schema or ontology. Piazza provides support for two methods for semantic mediation: mediated mapping, where data sources are related through a mediated schema or ontology, and point to point mappings, where a local schema is directly mapped to the schema of another site. Mappings are expressed in a language derived from XQuery.

When a user poses a query on a peer, it uses its local schema. The query is first mapped to the local data stored at the node. Next, the routing algorithm determines all the neighbours of the peer (i.e., nodes related by semantic mappings), reformulates the query for them, and passes the modified queries to them. The recursive processing of a query until no remaining useful links are discovered guarantees the exploration of all sources that are relevant. Sources return partial answers that have to be integrated. In summary, Piazza covers a large variety of mappings with a complex query processing algorithm.

SomeWhere (Adjiman, 2005) is another project dealing with semantic mediation. It is similar to Piazza, but uses description logic to define mappings between ontologies. Queries are routed according to the relevant mappings. In P2P data systems, notably for semantic query routing and data integration, metadata are keys. Focusing on metadata standards, Edutella (Nejdl, 2003) is a multi-staged effort to efficiently handles RDF metadata on top of JXTA, an open source plate-form for supporting P2P applications leaded by Sun. Edutella provides several services, among them a query service and a mapping service, to respectively retrieve metadata and translate between different vocabularies. This infrastructure provides an appropriate routing algorithm for semantic queries based on RDF standards for the description of sources. The routing process uses a network based on a HyperCup (Schlosser, 2002), which is capable of organizing peers from a P2P network into a recursive graph structure. Edutella should provide an example of routing process based on the semantic of data.

KadoP is a system for sharing data and knowledge content in a peer to peer environment. KadoP’s data model annotates published XML documents by using two kinds of items: data item for structural description and semantic items to combine with an ontology. These items are indexed using Pastry DHT. The KadoP query language uses tree pattern, whose nodes represent data items, and whose edges represent containment relationships among the nodes. The query processing is based on ActiveXML where all data items are only retrieved on demand, by activating a Web service call execution in the P2P network.

XLive is a light XQuery mediator. It deals on XML views of heterogeneous data sources using wrappers and adapters that cope with the heterogeneity of the sources (DBMS, Web pages, files, etc.). The data are integrated dynamically from multiple information sources. Queries are used as view definitions. During run-time, the application issues XML queries against the views. Queries and views are translated into an internal algebra and are combined into single algebra query plans. Sub-queries are sent to local wrappers that process them locally and return XML results. Finally, the global query processor evaluates the result, using appropriate integration and reconstruction algorithms. XLive is being extended to discover relevant sources dynamically in a P2P network.

[Koss00] D. Kossmann, The State of the art in distributed query processing. ACM Computing Surveys 32(4): 422-469 (2000)

[GWJ+03] L. Galanis, Y. Wang, S. R. Jeffery and D. J. DeWitt, Locating data sources in large distributed systems, in proceedings of the 29th VLDB conf., Germany, 2003

[RBH+04] C. Re, J. Brinkley, K. P. Hinshaw and D. Suciu. Distributed XQuery, in proceedings of the VLDB Workshop on Information Integration on the Web (IIWeb-2004) pp 116 121

[BCM+04] A. Bonifati, A. Cuzzocrea, U. Matrangolo and M. Jain, XPath Lookup queries in P2P networks, in proceedings of the 6th ACM International Workshop on Web Information and Data Management (WIDM 2004) 2004, Washington, DC, USA

[KHS04a] M. Karnstedt, K. Hose and K. Sattler, Distributed query processing in P2P systems with incomplete schema information, in proceedings of the int. Conf. CAiSE (Workshop DIWeb'04), Riga, Latvia, 2004.

[KHS04b] M. Karnstedt, K. Hose and K. Sattler, Query Routing and Processing in Schema-Based P2P Systems, in proceedings of the Int. Workshop Grid and Peer-to-Peer Computing Impacts on Large Scale Heterogeneous DB Systems (GLOBE'04 icw DEXA), Sep 2004, Zaragoza, Spain.

[FHD+06] Leonidas Fegaras, Weimin He, Gautam Das, and David Levine. XML Query Routing in Structured P2P Systems. DBISP2P 2006. Seoul, Korea. September, 2006.

=== RSS feed ranking ===

Defining score functions for ranking RSS feeds and feed items

according to some relevant user or application criteria is an

important issue. The ranking score of a feed or feed item can be etimated by

the feed contents (content-based relevance), but also by exploiting

structural, relational (link-based authority) and temporal properties (age).

Content-based scoring like the well-(know tf*idf) has been applied

with success in standard document retrieval systems. Pure content

based scoring has been combined with structured scoring functions in

the context of XML document retrieval [AKM+05,GBS03,MKS03].

Link-based ranking methods like PageRank [PBM+98] and HITS [Kle99]

have been developed and applied with success for ranking Web search

results. The basic idea of this family of ranking models and

algorithms is to define the importance or authority of a web page by

an estimated probability that it will be visited by some random

surfer. Web browsing is considered as discrete-time stochastic process

which can be modeled as a Markov Chain where each web page is a random

variable with the Markov property : the present state (current page)

is independent of the past state (previous page) and the future state

(next page) and each web link is labeled by an estimated probability

of going from one state (source page) to the other state (target

page). More recent link based approaches also consider time-sensitive

authority scores measures [CHLS04,BVW04] taking into consideration the

temporal evolution of web links. [CGM05] exploits temporal information

for ranking news and their sources. A recent article is more important

than an old one, and a source that produced recent important news is

more important than other sources. [CAG06] applies a similar approach

to web service call graphs where each service call represents a

temporal link between two web services. Recent work on

context-sensitive ranking [ART06] taking into account user preferences

and object-based ranking [CGH+06] exploiting the relationships between

structured data and documents (e.g. dynamic web pages) also are

relevant to the problem of ranking RSS feeds.

[AKM+05] Sihem Amer-Yahia, Nick Koudas, Amélie Marian, Divesh

Srivastava, David Toman, Structure and Content Scoring for XML,

VLDB 2005.

[GBS03] Lin Guo, Feng Shao, Chvdar Botev, Jayavel

Shanmugasundaram, XRank: Ranked Keyword Search over XML

Documents, SIGMOD 2003.

[CMKS03] Sara Cohen, Jonathan Mamou, Yaron Kanza, Yehoshua

Sagiv, XSearch : A Semantic Search Engine for XML, VLDB 2003.

[ART06] R. Agrawal, R. Rantzau, E. Terzi: Context-sensitive

ranking. SIGMOD Conference'06, pages 383-394, 2006.

[Kle99] J. M. Kleinberg. Authoritative Sources in a Hyperlinked

Environment. J. ACM, 46(5):604-632, 1999.

[PBM+98] L. Page, S. Brin, R. Motwani, and T. Winograd. The

PageRank Citation Ranking: Bringing Order to the Web. Technical

report, Stanford Digital Library Technologies Project, 1998.

[CGM05] G. M. D. Corso, A. Gulli, and F. Romani. Ranking a

Stream of News. In Proc. Intl. World Wide Web Conference (WWW),

pages 97-106, 2005.

[CGH+06] K. Chakrabarti, V. Ganti, J. Han, D. Xin: Ranking

objects based on relationships. SIGMOD Conference'06, pages

371-382, 2006.

[BVW04] K. Berberich, M. Vazirgiannis, and G. Weikum, T-Rank:

Time-Aware Authority Ranking. WAW 2004: 131-142, 2004.

[CHLS04] Einat Amitay, David Carmel, Michael Herscovici, Ronny

Lempel, Aya Soffer, Trend detection through temporal link

analysis, JASIST 2004.

[CAG06] Camelia Constantin, Bernd Amann, David Gross-Amblard: A

Link-Based Ranking Model for Services. OTM Conferences (1) 2006:

327-344, 2006.